The Evolution of Human-AI Collaboration: From Teaching Machines to Learning Together

- Puneet Tyagi

- Global Head - AI Data Services, Qualitest

Jul 15, 2025 · 4 min read

This prescient observation by computer science pioneer Edsger Dijkstra captures the essence of how our understanding of artificial intelligence has evolved. We’ve moved past wondering whether machines can “think.”

The real question today is: how can machines and humans learn and evolve together?

From the earliest days of computing, the relationship between humans and machines was one-directional. Humans programmed machines, issuing commands and defining exact rules.

Today, we live in a radically different world where machines are increasingly learning to interpret, respond to, and even anticipate human needs with nuance, creativity, and contextual understanding.

For decades, the traditional paradigm in computing was rooted in explicit instruction: humans coded, and machines executed. These systems were deterministic, logic-driven, and largely inflexible. But with the advent of deep learning, we witnessed a profound shift.

Breakthroughs like AlphaZero, which mastered complex games through self-play and reinforcement learning, and the emergence of powerful language models like GPT, revealed a new possibility: machines could now learn patterns, strategies, and even seemingly intuitive behaviors without being explicitly programmed.

They began not just to respond to commands but to engage with us, picking up on context, tone, and intent.

This shift marked a key inflection point in the evolution of human-AI collaboration.

Despite these advancements, replicating true human-like intelligence remains an unsolved challenge. Generating coherent text or mimicking conversation is not the same as understanding ethics, cultural norms, or emotional nuance.

Take the example of night vision systems used in gaming versus military contexts. The underlying technology may be similar, but the stakes, safety requirements, and usage environments differ dramatically.

AI must learn to contextualize: to adapt the same functionality differently depending on the human goal and environment. Current models, while impressive, often lack this depth of situational understanding.

The key to narrowing this gap lies not in more data alone, but in better data: data guided by diverse human experiences and domain-specific expertise.

Experts, not just annotators

Humans possess tacit knowledge: insights gained through experience, cultural immersion, and ethical reasoning that machines can’t infer from raw data.

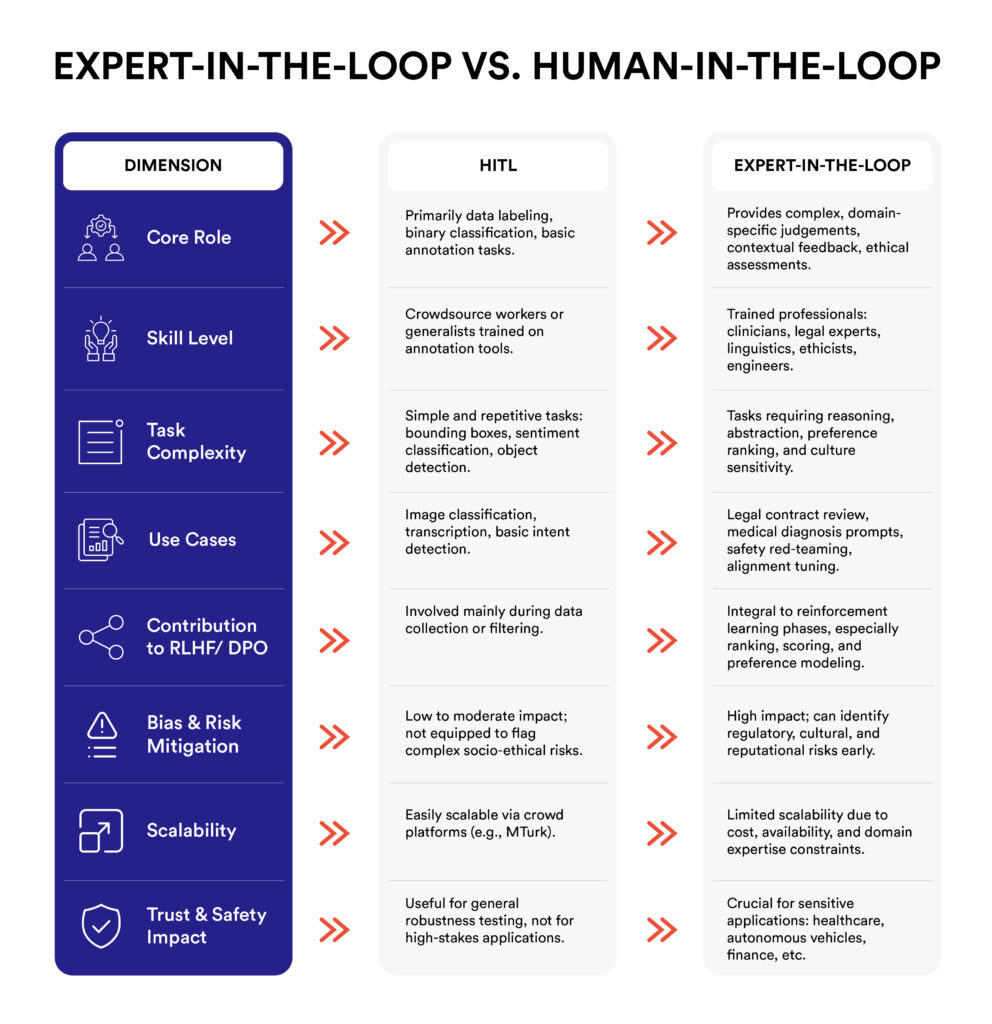

This makes Expert-in-the-Loop (EITL) approaches essential. We must go beyond traditional “human-in-the-loop” labeling to involve specialists who can inform and shape the AI’s learning.

Diverse expertise enriches the training process with the kind of nuance machines can’t learn on their own. However, sourcing expert workforces with the right diversity, scale, and domain-specific skills remains a significant challenge for the industry. Building such teams requires deliberate effort, collaboration, and investment across disciplines and geographies.

Building trustworthy AI systems means creating infrastructure with the right incentives, scale, and quality to enable human experts to predictably collaborate with machines throughout development, not just during final evaluation.

Modern GenAI pipelines now increasingly rely on:

These systems are not just about managing workflows. They’re about enabling insight to travel both ways between human and machine.
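One way to picture that two-way flow is a small routing loop. The sketch below is purely illustrative and not drawn from any specific product: the `ExpertLoop` class, the 0.8 confidence threshold, and the domain and label names are all assumptions made for the example. It shows predictions the model is unsure about being sent to a domain expert (machine to human), and the expert's corrected label being kept as future training data (human to machine).

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    domain: str        # hypothetical domain tag, e.g. "medical" or "legal"
    label: str         # the model's proposed label
    confidence: float  # model's self-reported score in [0, 1]


@dataclass
class ExpertLoop:
    # Assumed cutoff: predictions scoring below it are sent to an expert.
    threshold: float = 0.8
    review_queues: dict = field(default_factory=dict)      # domain -> [Prediction]
    training_feedback: list = field(default_factory=list)  # (item_id, expert label)

    def route(self, pred: Prediction) -> str:
        """Machine -> human: accept confident predictions, queue the rest."""
        if pred.confidence >= self.threshold:
            return "auto_accepted"
        self.review_queues.setdefault(pred.domain, []).append(pred)
        return "sent_to_expert"

    def record_review(self, pred: Prediction, expert_label: str) -> None:
        """Human -> machine: store the expert's label as a training example."""
        self.review_queues[pred.domain].remove(pred)
        self.training_feedback.append((pred.item_id, expert_label))


loop = ExpertLoop()
confident = Prediction("doc-1", "legal", "low_risk", 0.95)
uncertain = Prediction("scan-7", "medical", "benign", 0.55)

status_confident = loop.route(confident)   # stays with the machine
status_uncertain = loop.route(uncertain)   # queued for a medical expert
loop.record_review(uncertain, expert_label="follow_up")
```

Queuing by domain is the design choice that separates this from generic labeling: each uncertain item lands with a specialist in the relevant field rather than with whichever annotator is free.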

Bringing experts into the loop is not a cost overhead. It’s a strategic investment. Organizations that invest in expert-driven AI development gain:

In a world where AI systems are increasingly embedded in legal, medical, financial, and social domains, these advantages are not just desirable; they’re mission-critical.

Ultimately, as GenAI continues to redefine what’s possible, the real winners will not be those with the biggest models, but those who understand the irreplaceable value of human insight and build systems that learn, adapt, and evolve with it.